Are you looking for a needle in a haystack?

Data mining allows to explore and analyze a wide quantity of data stored in a database with the goal to extract precious and unknown informations which are implied on data and they might be useful for making strategic decisions.

Data mining techniques are used to discover correlations between data (assosacition rules), to create classifier for predicting a variable’s value (decision tree, neural networks) or to organize unknown data in groups with similar features (clustering).

Classification

Classification consists of assigning a class label to a set of unclassified cases.

There are two types of classificatons:

- Supervised Classification

- Unsupervised Classficiation

A classificator based on a supervised learning algorithm builds a model in order to predict a value of an attribute called “class label“. This means that the algorithm implemented on the classificator has to learn how to predict a value of an attribute and this is accomplished by processing a data set in which the “right answers” were given. This data set used during the learnig process is called “training set“.

Therefore the input of the classificator is a training set, a set of multiple records. Each record is tagged with a class label. The class label, thus, represents the record’s category. It turns out that with this approach the set of possible classes is known in advance.

The output of the classifier is a model that given a record without a label, it is able to assign at the new record the class label with the maximum accurancy.

How to understand if the classifier is working properly? In other words, how to validate the output model?

In order to validate the model, a subset of the training set in which the class label has been intentionally removed is used. This subset is called “test set“.

To sum up, the classifier builds a model from the training set in which each record has a special class attribute pre-assigned. Then, to verify if the classifier predicts the right record class is used a test set. It’s a straightforward process because the classifier has to compare the predicted class attribute with the known one.

The term accurancy refers to the total number of records classified correctly with reference to the total number of classified record.

There are several techniques used to validate a model: confusion matrix, cost matrix and cost-sensitive measures such as precision/recall measure.

Application Fields

- Direct Marketing: the marketing team would promote a new smartphone and the would find potential customers

- Customer Attrition

- Text Analysis

- Loans

- Fault detections

- Classifying credit card transactions as legitimate or fraudulent

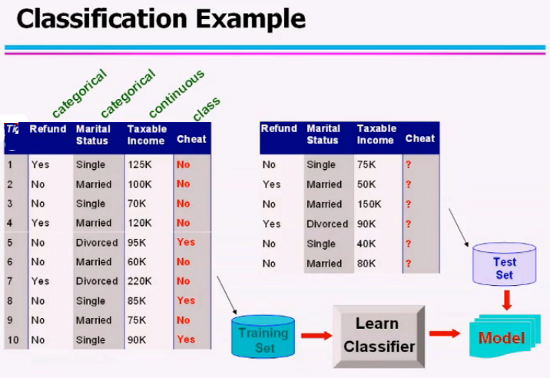

Example – Cheating on taxes

The figure above shows an example of a classificator that predicts if a person cheats on taxes.

Each record of the training set has the following attributes:

- Tid – transaction id

- Refund – it’s a boolean value that indicates if the person requested a refund

- Taxable Income

- Cheat

The attribute cheat is the class label, the classifier tries to predict its value. From the training set, the classifier learns the model. Afterwards it takes a subset of the training set and it removes the value of the class label cheat (?). This subset is the test set. At the end it will run and compare the predicted value with the real one and it understands how much the model learnt is accurated.

Classification Techniques

- Decision Tree based methods

- Rule-based methods

- Memory based reasoning

- Naive Bayes and Bayesuan Belief Netowrks

- Support Vector Machines

References

the slide referenced by “Data Mining – Classification: Basic Concepts, Decision Trees, and Model Evaluation – Lecture Notes for Chapter 4 – Introduction to Data Mining by Tan, Steinbach, Kumar” e sono scaricabili a questo link.

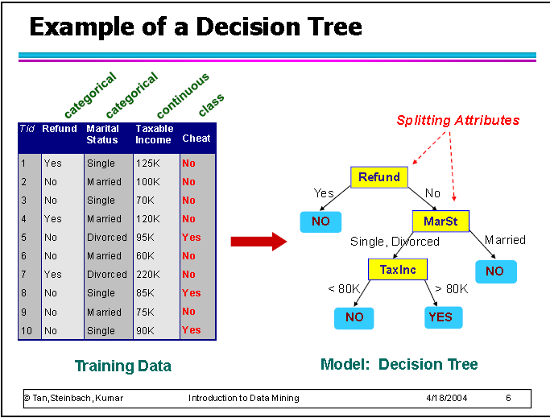

Decision Tree

The decision tree is a model used for classification. A decision tree is built analisyng the value of the attributes and it splits data set into branch-like segments.

After a test executed on a value of an attribute, the algorithm split data into branches.

Attraversing the decision tree from the root node to the leafs are the value of the label class. And the segment (or arcs) represents the outcome of the test on the attribute.

In each internal node of the tree is perfomed a test on an attribute of the record.

The tree leafs contain the class labels.

In the figure below is shown a possible decision tree which can be implemented to predict the issue of cheat the tax.

How the classifier works

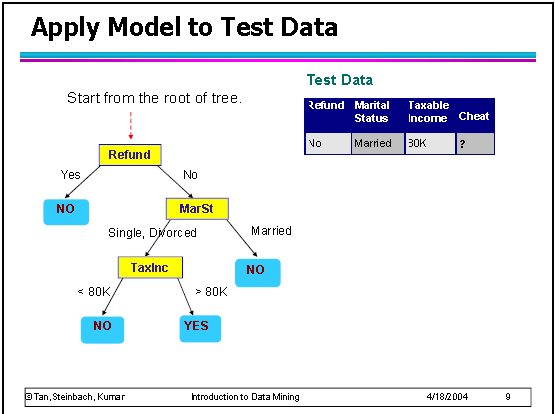

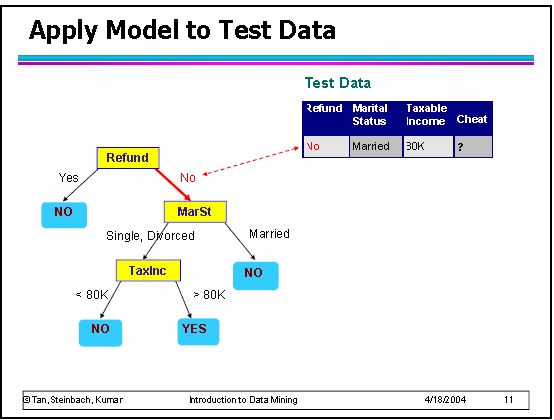

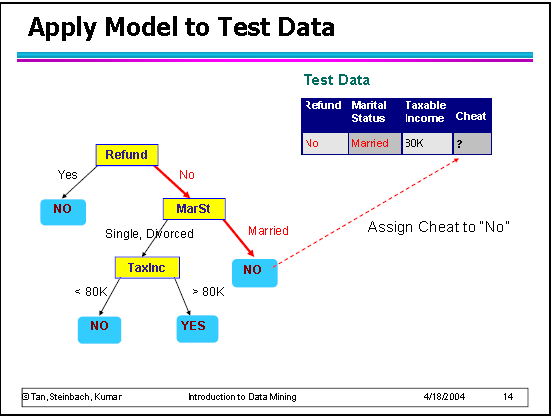

Let see how the classifier process an unseen record in order to predict the value of the cheat attribute.

In the figure, the unseen record is called “Test data” and it contains the following attributes:

- Refund: no

- Marital Status: Married

- Taxable Income: 30K

- Cheat: ?

Starting from the root, the classifier performs a test on the attribute “Refund” which value is equal to “No”. Thus, it follows the right branch and it reachs the node “Marital Status”.

At this point, the classifier performs a test on the attribute Marital Status which value is equal to Married, thus, it follows the right branch again.

The classifier reached a leaf node in which the class label is equal to “No”.

Therefore, the person correlated to the “test data record” is not cheating on taxes.

How to build a decision tree

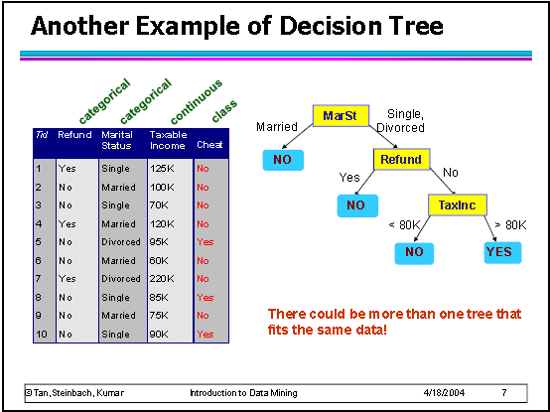

The criticity is that starting from the data stored in the training set is possible to build several decision trees. Which one is the best?

For instance, an other possible decision tree for predicting the value of the cheat class label is those in which the root node is represented by Marital Status, the the tree split by Refund and then for Taxable Income.

The new computed decision tree is shown in the figure:

It turns out that is impossible to try all the solutions space in order to find the most accurate decision tree.

So, how to find a “good” tree?

There are severl algorithms to deduct a decision tree from the training set:

- Hunt’s Algorithm

- CART

- ID3, C4.5

- SLIQ,SPRINT

For instance, the Hunt’s algorithm is the easiest. It is based on a greedy strategy because it selects the attribute that locally in the node generates the purer partitions.

The term purer partion refers to partitions which contain all records of the same class.

Only on the root node it selects the best attribute.

Hunt’s algorithm grows a decision tree in a recursive fashion by partitioning the trainig records into successively purer subsets

How to evaluate if a node is pure.

The measurment of node impurity/purity are:

- GINI INDEX

- ENTROPY

- MISCLASSIFICATION ERROR

Decision Tree Advantagies

- They are easy to build.

- The classifier is fast

- The model generated is interpretable

- The accurancy is good if the data set isn’t too complex

Decision Tree Disadvantagies

Decision Tree could be affected by the overfitting phenomenon. It occurs when it generats tree too complex and deep which try to separate elements of differents classes.

The classifier becomes too specialized. So it is necessary to semplify the tree with pre-pruning and post-pruning techniques.

Weka: Waikato Environment for Knowledge Analysis

Weka is a collection of machine learning algorithms for data mining tasks..

With WEKA is possible to use data mining algorithms written in java to do:

- Preprocessing

- Classifications – via Decision Tree J48

- Clustering

- Assosacition Rules

The algorithms can be called:

- via a command line

- via GUI

- via a call on a java program